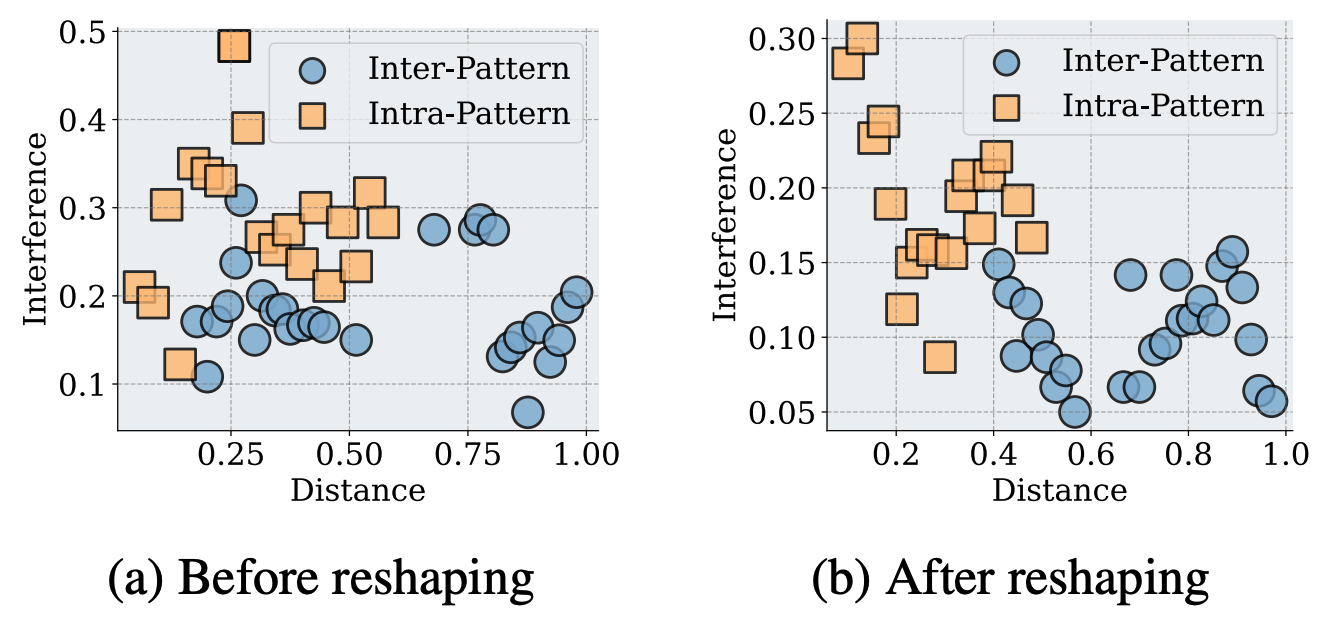

Reforming the Mechanism: Editing Reasoning Patterns in LLMs with Circuit Reshaping

Zhenyu Lei, Qiong Wu, Jianxiong Dong, Yinhan He, Emily Dodwell, Yushun Dong, Jundong Li

ICLR 2026

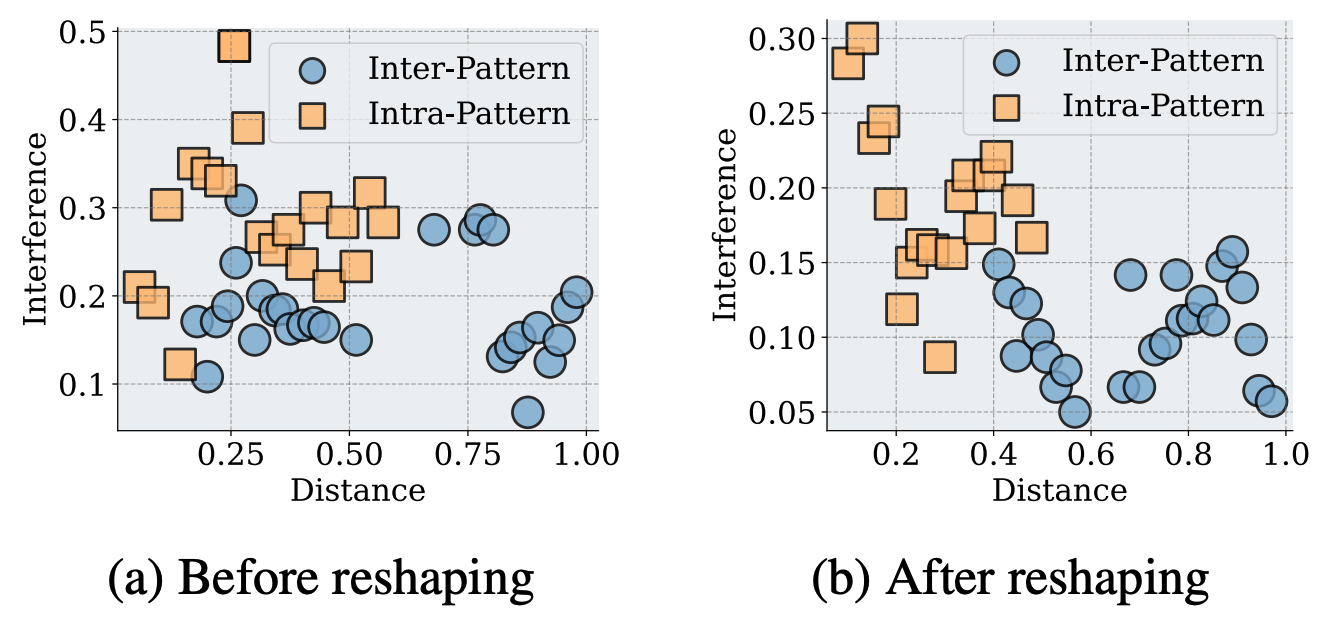

Large language models can make systematic, pattern-specific reasoning mistakes, and broad training fixes are inefficient and often disrupt other abilities. This paper introduces Reasoning Editing, the task of making targeted edits to a specific reasoning pattern, and highlights a key trade-off between generality and locality. To reduce this trade-off, we propose REdit, which reshapes the underlying reasoning circuits to limit interference before applying a lightweight edit.

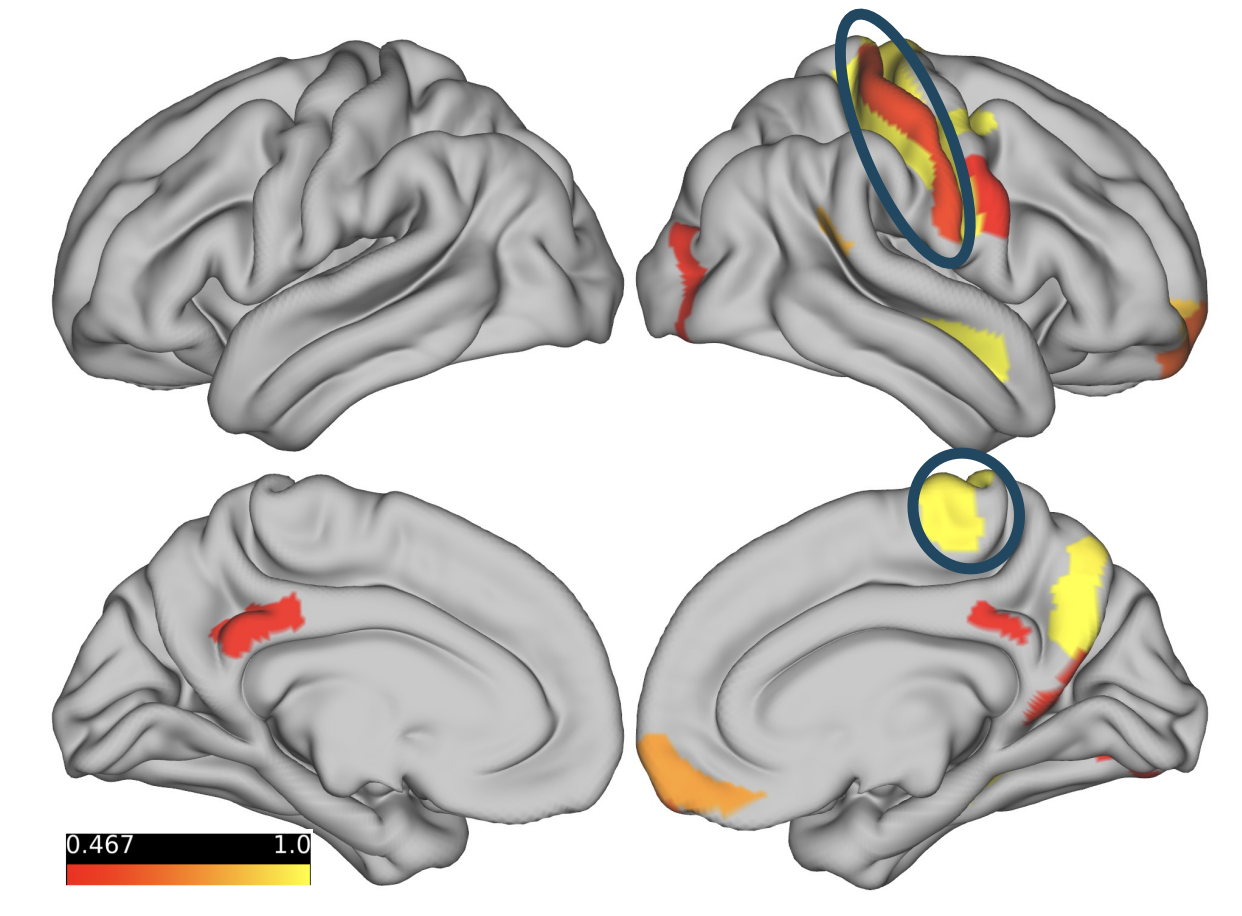

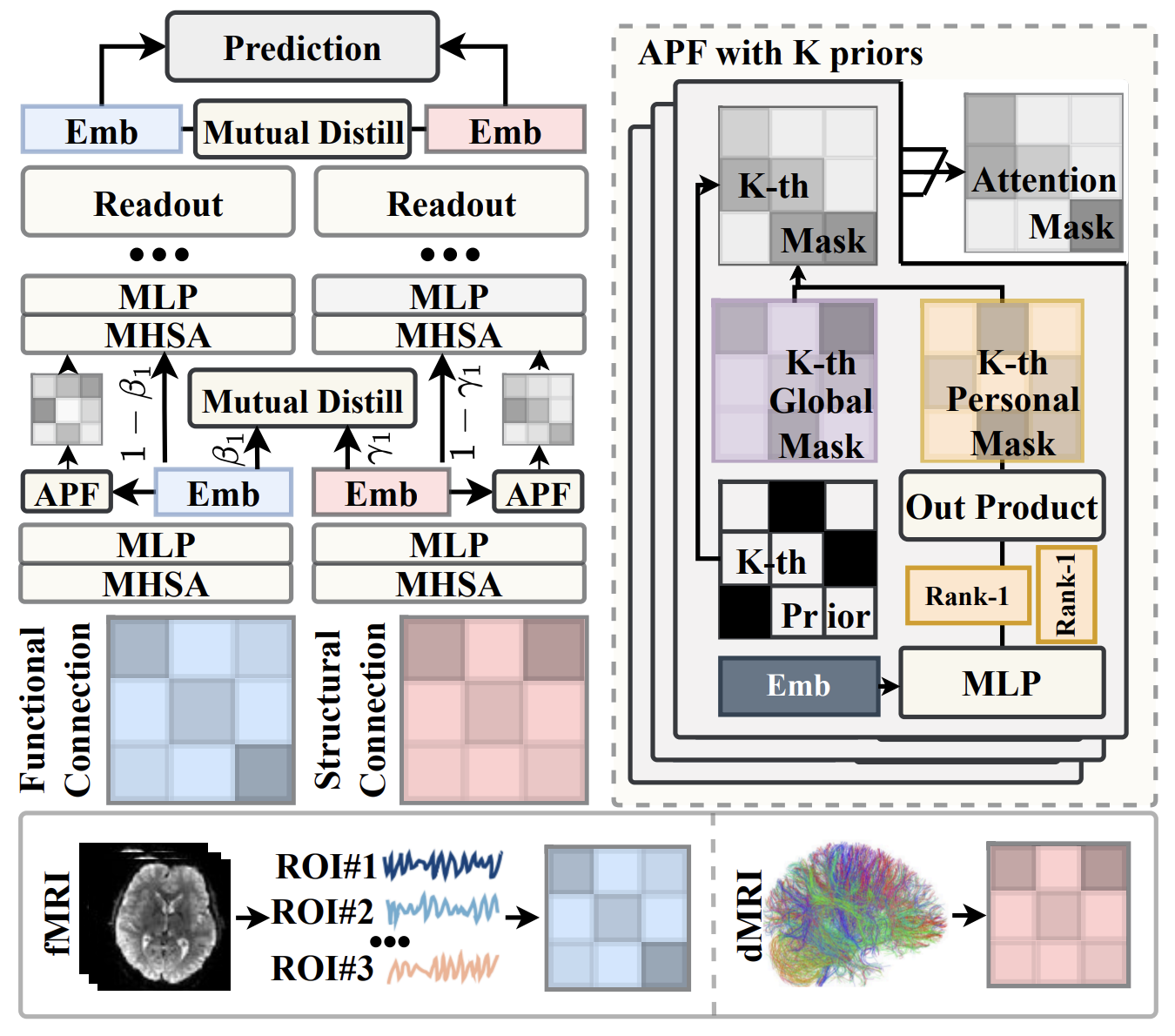

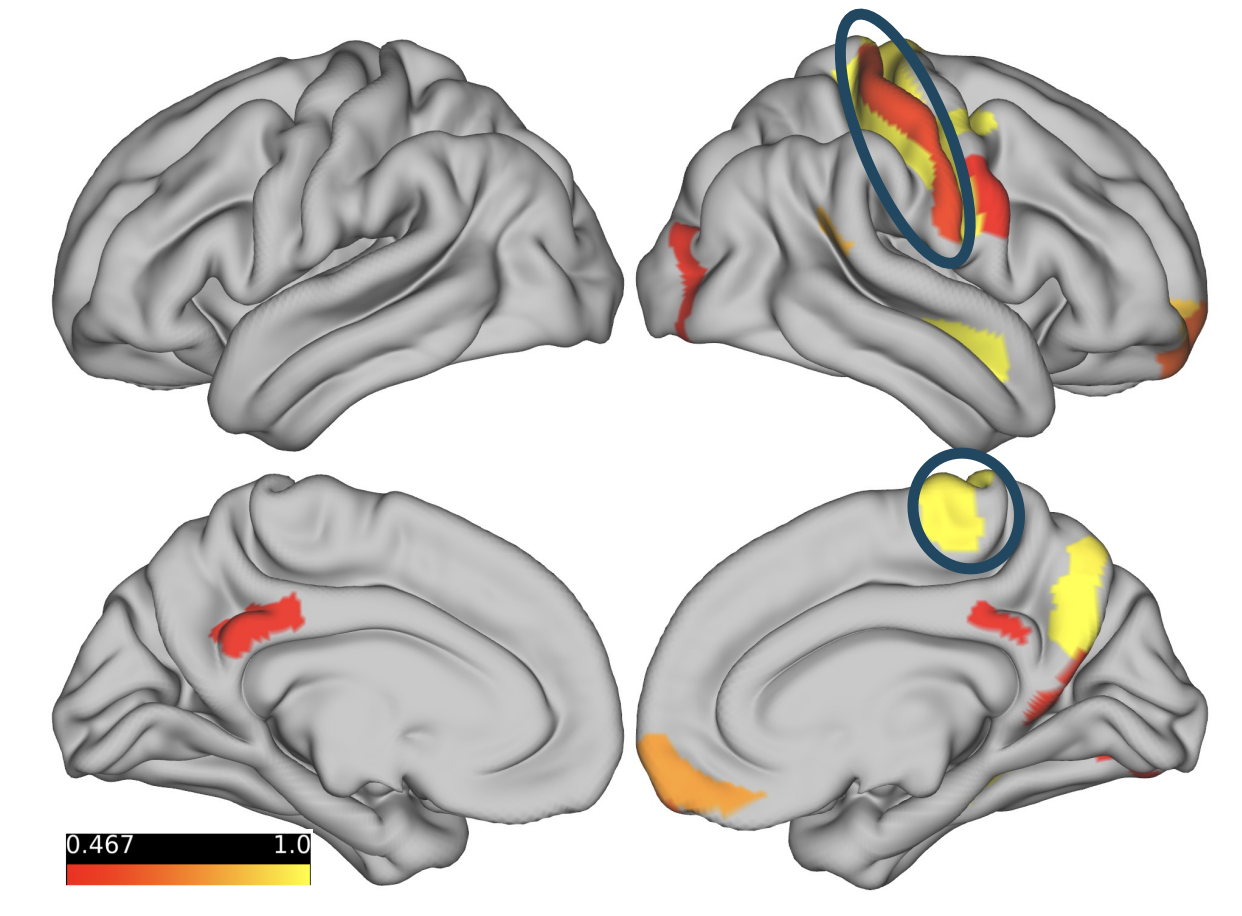

BrainTAP: Brain Disorder Prediction With Adaptive Distill And Selective Prior Integration

Zhenyu Lei, Aiying Zhang, Song Wang, Han Fan, Jundong Li

ISBI 2026

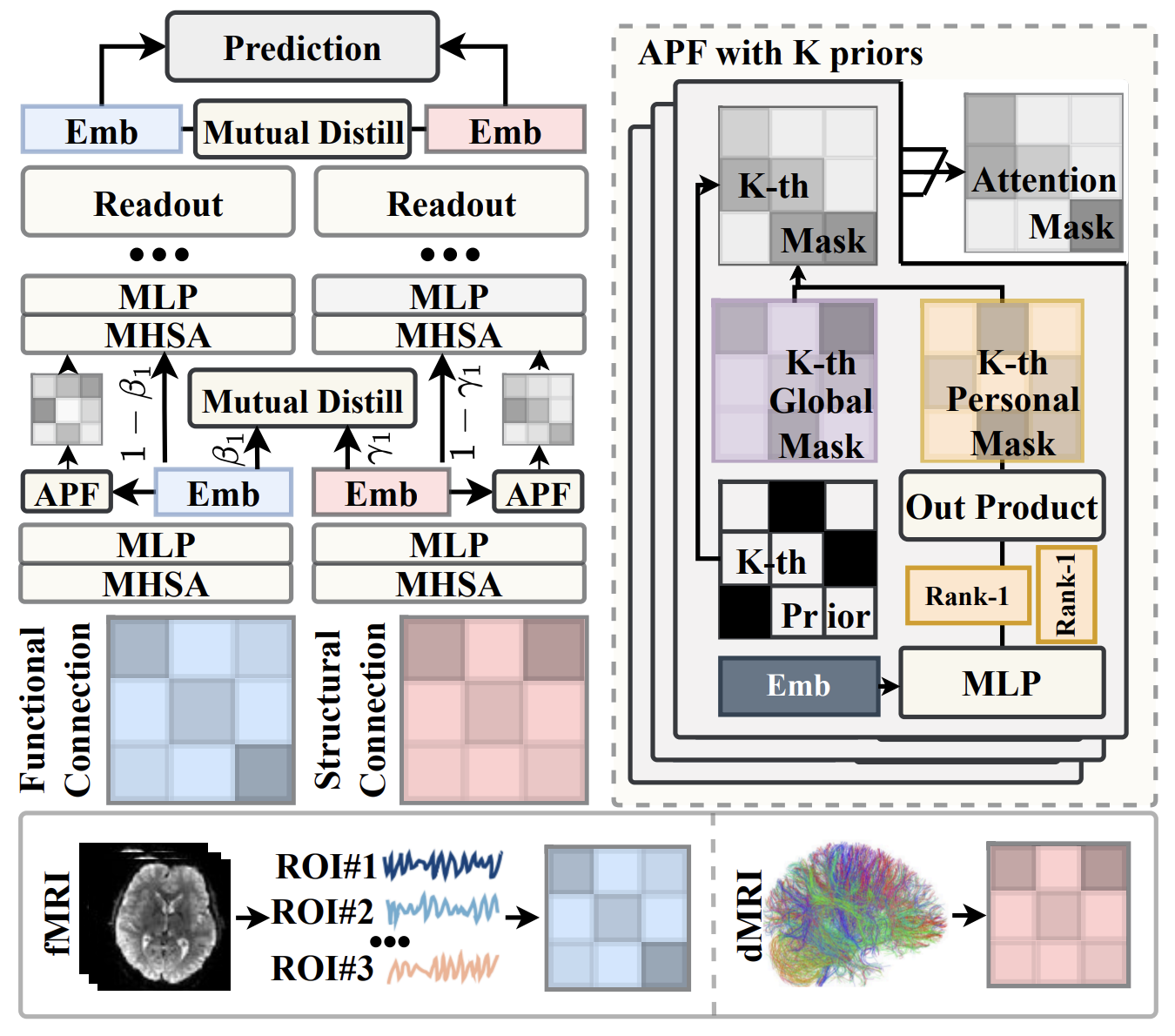

This paper introduces BrainTAP, a transformer-based framework for brain disorder prediction that integrates functional and structural connectivity while preserving their heterogeneous properties. The model employs adaptive mutual distillation to enable controlled cross-modal interaction and selectively incorporates expert-defined neurobiological priors through learnable global and personalized attention mechanisms, improving both representation quality and interpretability.

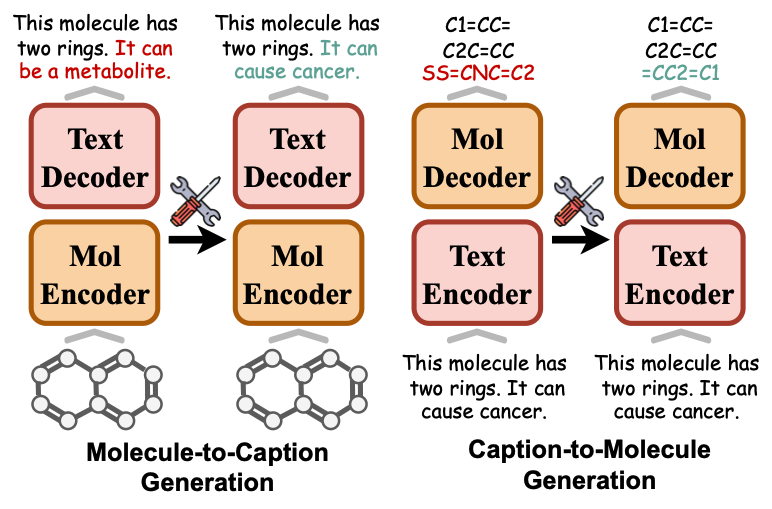

MolEdit: Knowledge Editing for Multimodal Molecule Language Models

Zhenyu Lei, Patrick Soga, Yaochen Zhu, Yinhan He, Yushun Dong, Jundong Li

WSDM 2026 (Oral)

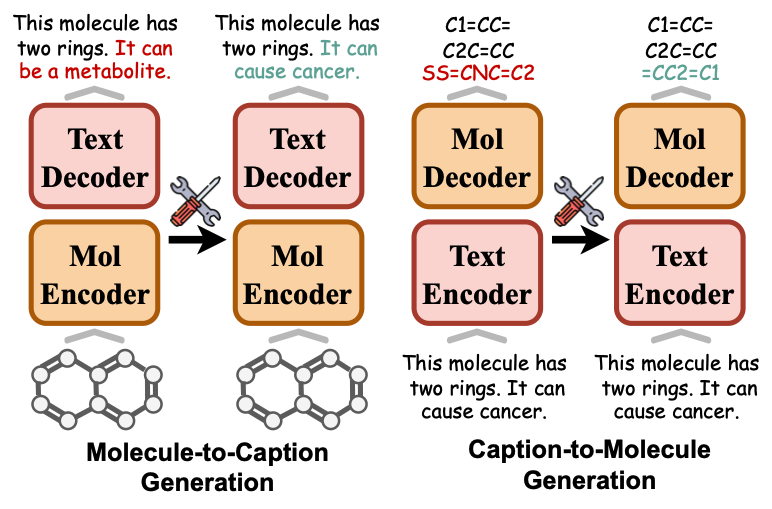

MolEdit is a framework for precisely editing facts inside multimodal molecule language models so that outdated or incorrect chemistry and biomedical knowledge can be fixed without retraining. It uses a Multi-Expert Knowledge Adapter (MEKA) to edit specific facets (e.g., functional groups, properties) and an Expertise-Aware Editing Switcher (EAES) to trigger edits only on closely matching inputs. The accompanying benchmark, MEBench, evaluates reliability, locality, and generality, and MolEdit shows sizable gains over prior editors.

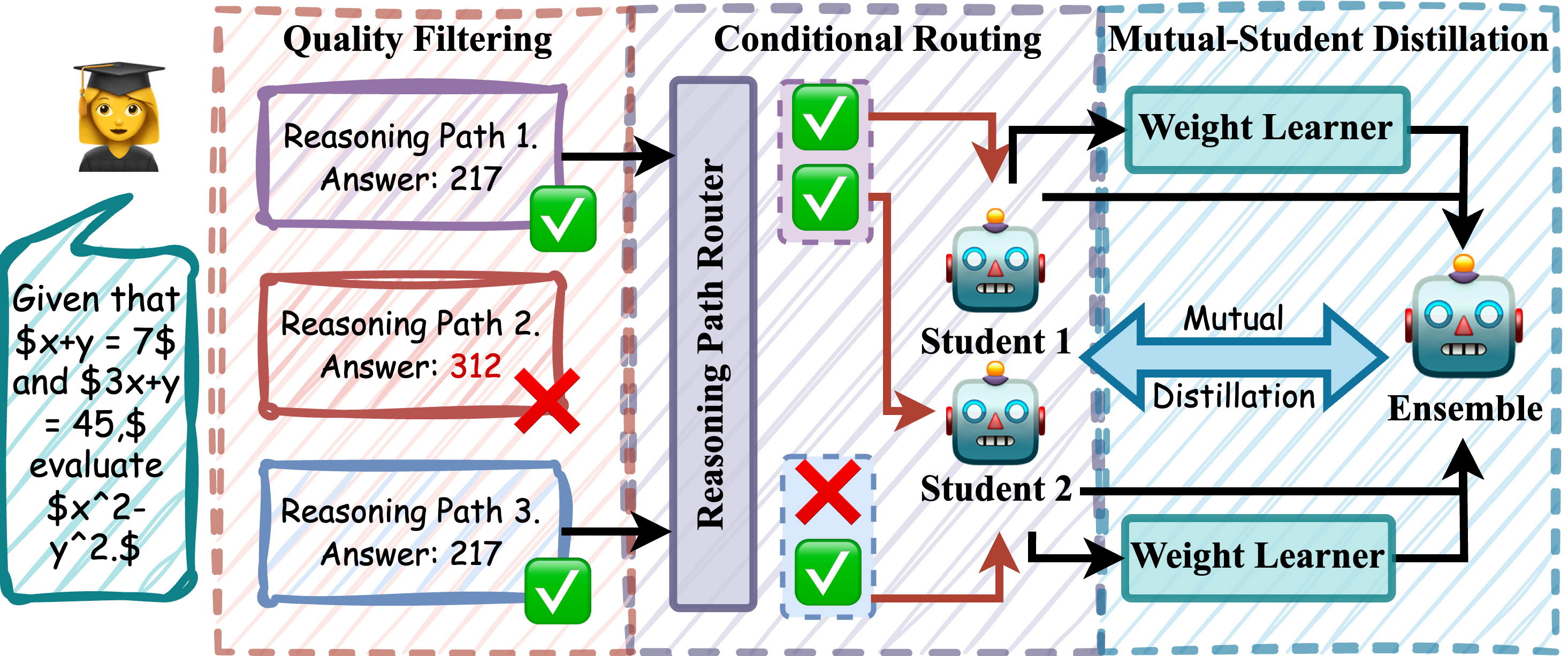

Learning from Diverse Reasoning Paths with Routing and Collaboration

Zhenyu Lei, Zhen Tan, Song Wang, Yaochen Zhu, Zihan Chen, Yushun Dong, Jundong Li

EMNLP 2025

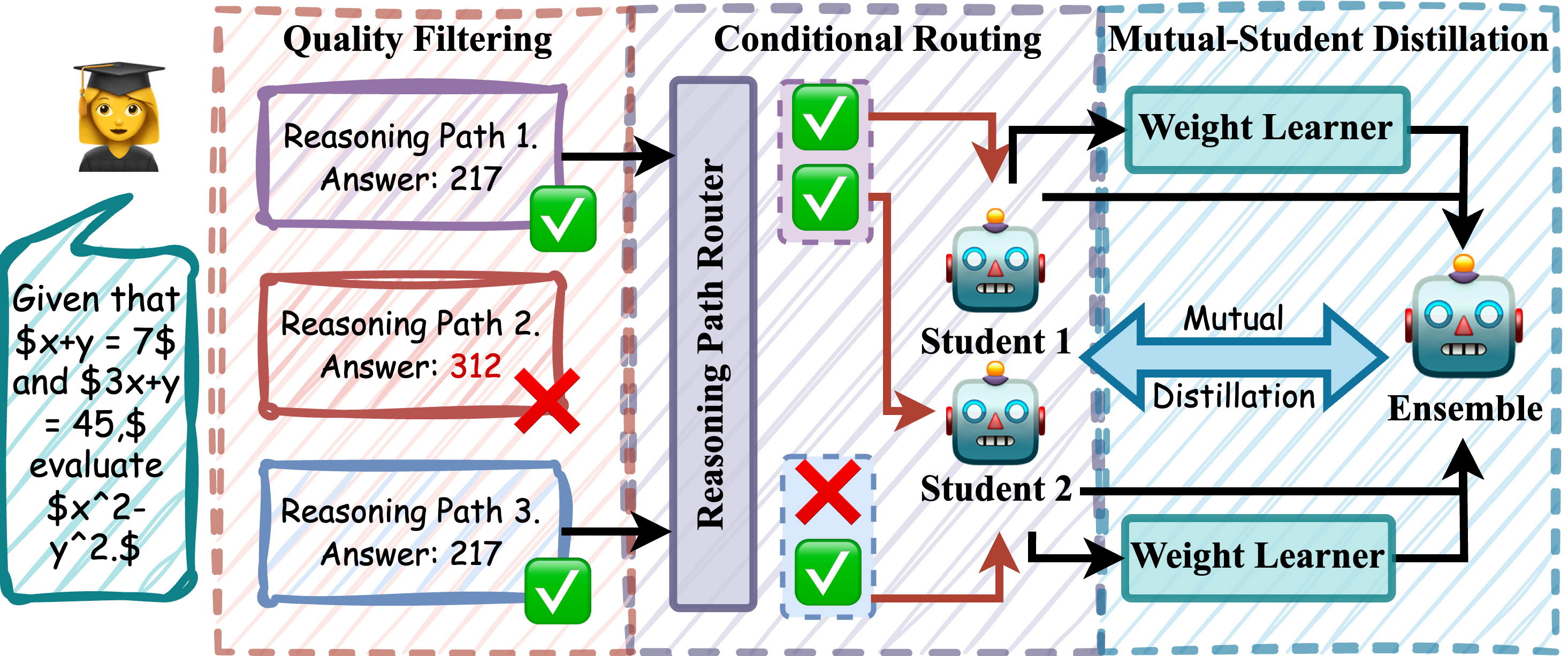

This work proposes QR-Distill, a distillation framework that (i) filters for correct, LLM-judged high-quality chains-of-thought, (ii) conditionally routes the remaining paths to students based on their current state, and (iii) enables mutual student collaboration via feature-level peer teaching. Across multiple reasoning datasets, it outperforms single- and multi-path baselines.

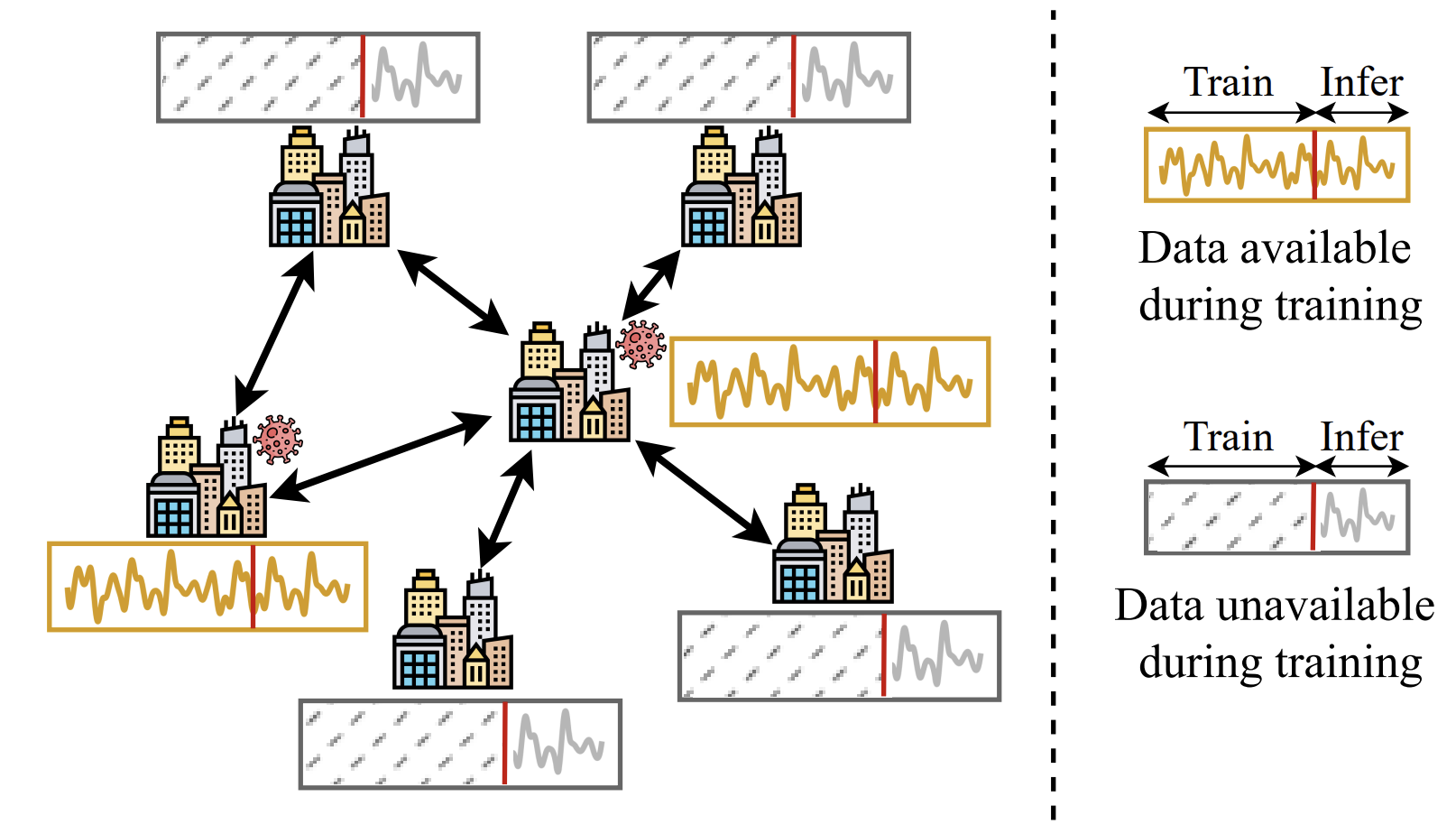

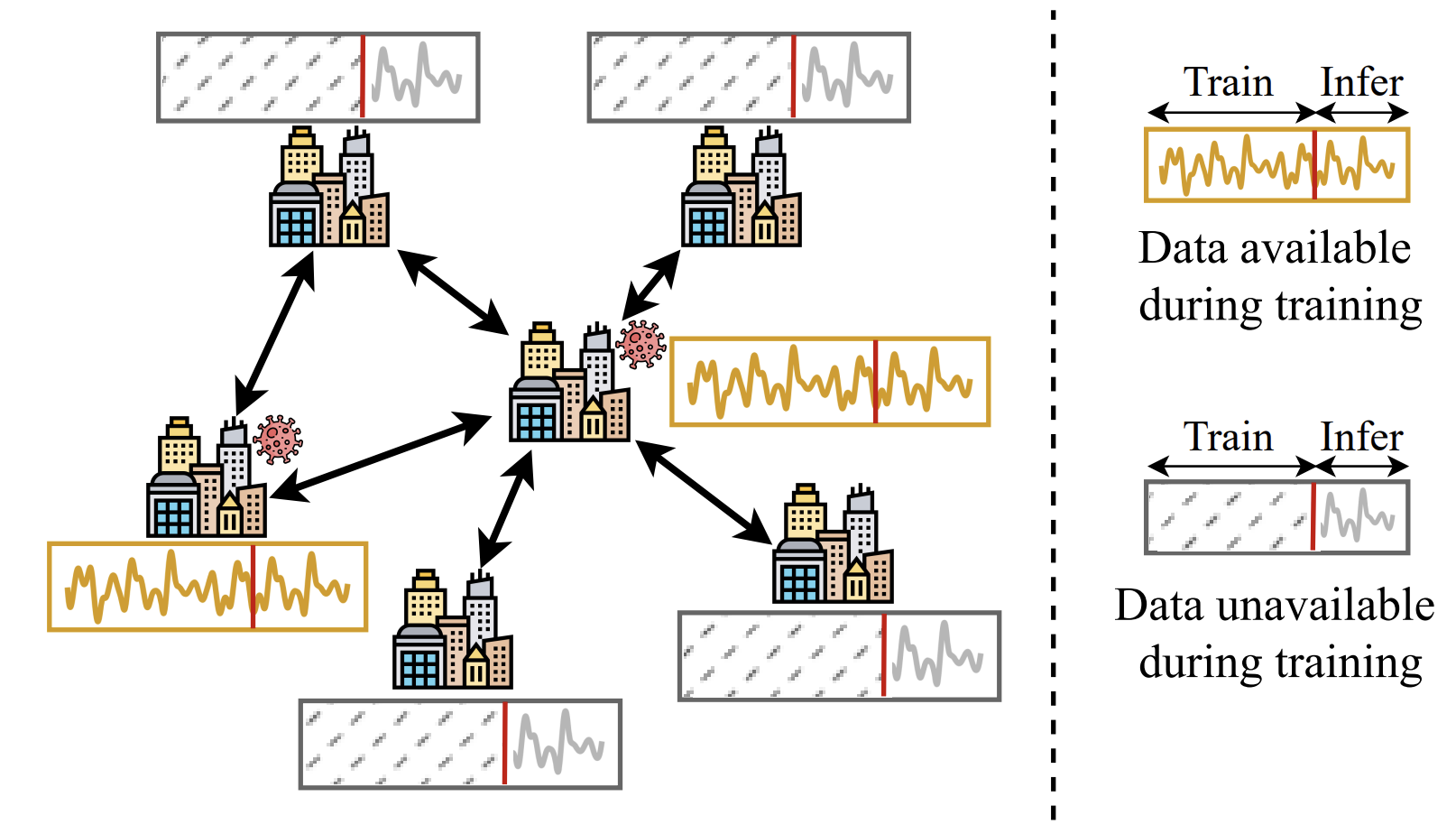

ST-FiT: Inductive Spatial-Temporal Forecasting with Limited Training Data

Zhenyu Lei, Yushun Dong, Jundong Li, Chen Chen

AAAI 2025 (Oral)

This paper studies the under-explored problem of inductive forecasting with limited training data, in which a model must generalize learned spatial-temporal dependencies from nodes that have training temporal data to nodes that have none. To address this problem, we propose ST-FiT, which achieves superior performance without additional fine-tuning.

BrainMAP: Learning Multiple Activation Pathways in Brain Networks

Song Wang*, Zhenyu Lei*, Zhen Tan, Jiaqi Ding, Xinyu Zhao, Yushun Dong, Guorong Wu, Tianlong Chen, Chen Chen, Aiying Zhang, Jundong Li

AAAI 2025 (Oral)

While significant progress has been made in understanding brain activity through functional connectivity (FC) graphs, challenges remain in effectively capturing and interpreting the complex, long-range dependencies and multiple pathways that are inherent in these graphs. In this work, we introduce BrainMAP, a novel framework that can extract multiple long-range activation pathways with adaptive sequentialization and pathway aggregation.

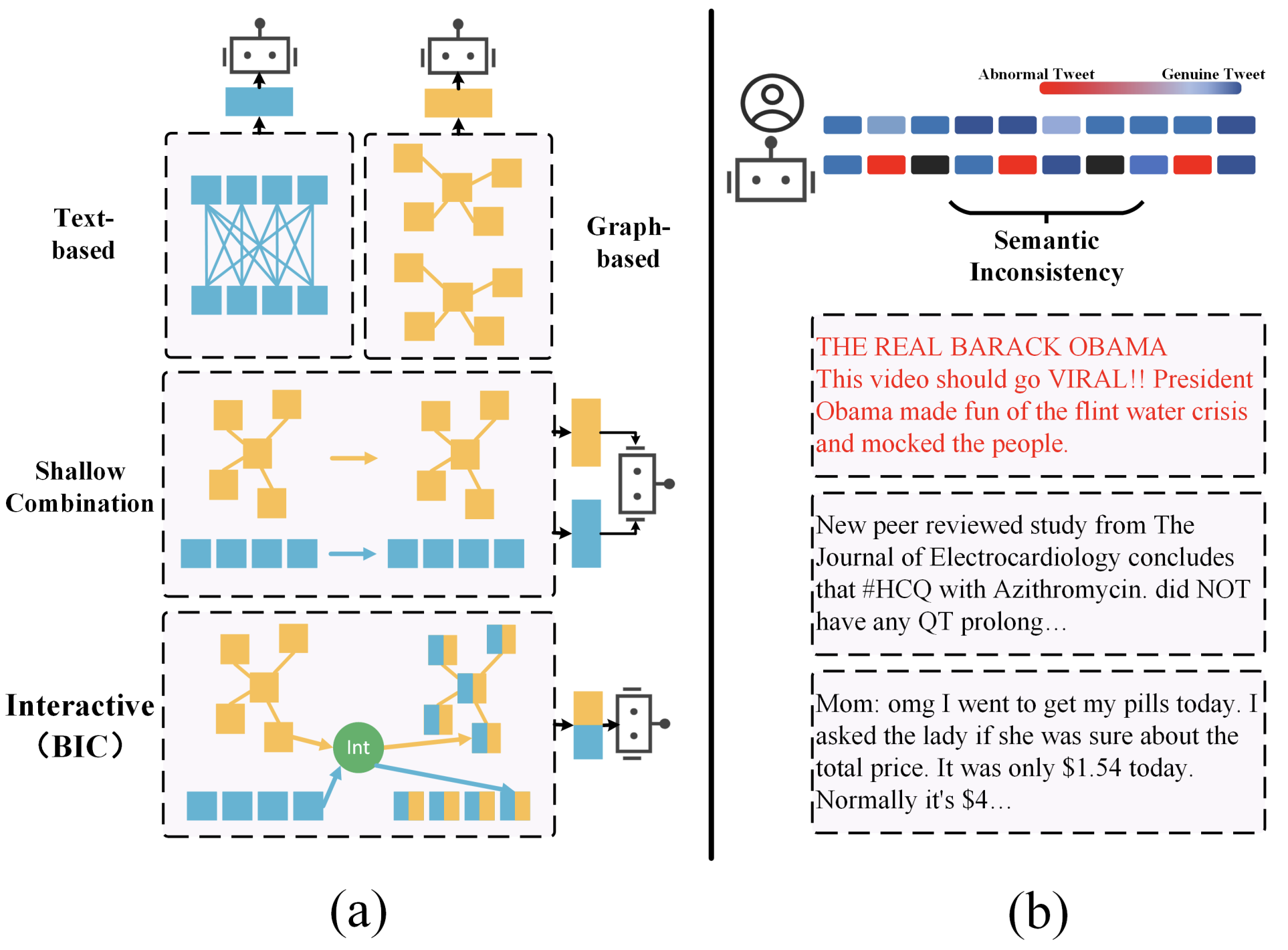

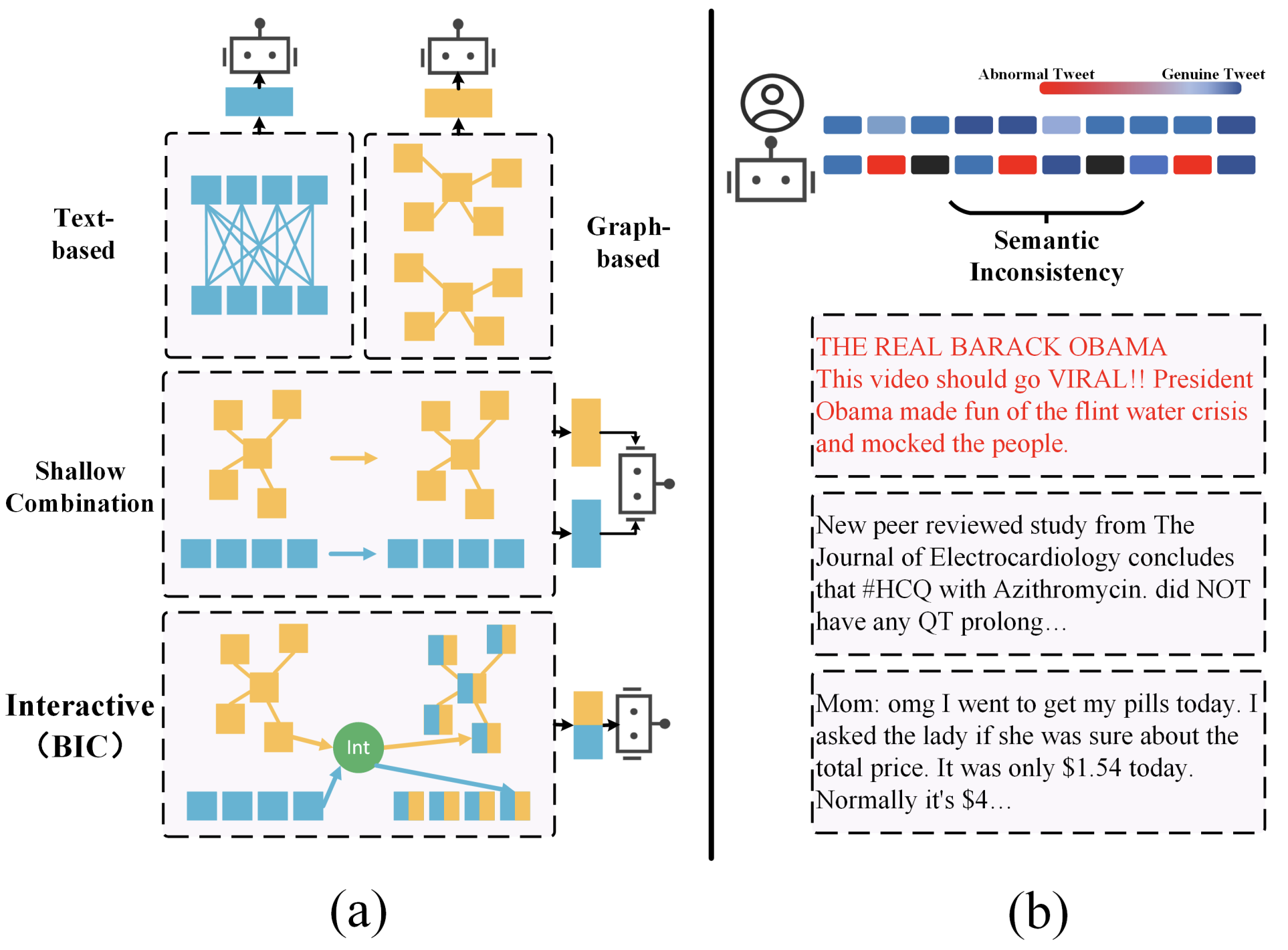

BIC: Twitter Bot Detection with Text-Graph Interaction and Semantic Consistency

Zhenyu Lei*, Herun Wan*, Wenqian Zhang, Shangbin Feng, Jundong Li, Qinghua Zheng, Minnan Luo

ACL 2023

We propose a bot detection model named BIC. BIC exchanges information across the text and graph modalities through a text-graph interaction module. It also contains a semantic consistency module that derives inconsistency from tweets via attention weights to identify advanced bots.